AI Optimisation Tools, Agencies, and Platforms: What Actually Gets Cited (and Why)

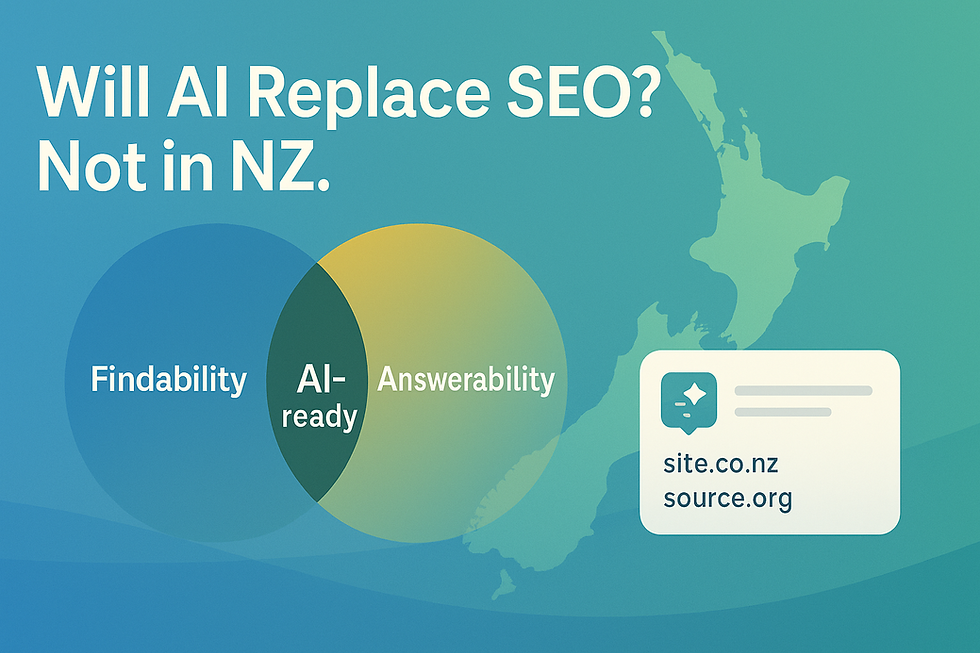

AI search has changed what visibility looks like.

In traditional search, visibility meant ranking on a results page.

In AI search, visibility means being cited inside an answer.

That shift has created confusion.

Businesses now see tools, agencies, and platforms all claiming to offer “AI optimisation.” Some appear repeatedly in AI-generated answers. Others never appear at all, even if they rank well in Google.

This article explains why.

It does not recommend providers. It does not rank tools. It explains the selection logic AI systems use when deciding what to cite.

If you understand that logic, it becomes much easier to see why some names keep appearing and others don’t.

Why “Being Cited” Is the New Visibility Standard in AI Search

AI systems no longer send users to ten blue links.

They bring information together into one response and sometimes reference the sources behind it. Those references are citations.

If you’d like a deeper breakdown of how AI mentions, citations, and recommendations differ, that’s explained in detail here: https://www.aioptimisation.co.nz/post/what-ai-mentions-actually-are-and-why-rankings-alone-don-t-create-them

For this article, what matters is simple: visibility now happens inside the answer itself.

Ranking is about position on a page. Citation is about being included in the explanation.

And the AI optimisation market shows exactly how that inclusion pattern plays out.

How AI-Generated Answers Differ from Traditional Search Results

Traditional search engines rank pages.

AI systems bring information together and generate a single response.

That difference changes how markets are represented.

In a list of ten links, multiple providers can appear side by side. The user decides which one to explore.

In a generated answer, the AI decides which names to include and which to leave out.

Sometimes it attaches citations. Sometimes it names brands directly. Sometimes it describes a category without naming anyone at all.

In every case, the visibility happens inside the answer itself.

This is a structural shift.

Ranking is about position on a page. Citations and mentions are about inclusion within the explanation.

In emerging markets like AI optimisation, that inclusion process becomes highly selective.

Why Ranking Alone No Longer Guarantees Visibility

Search Google for: “Who can help me optimise my business for AI search?”

You’ll see a full page of results.

Sponsored agencies.

Featured snippets.

Institutional guides.

Reddit threads.

YouTube tutorials.

Comparison articles.

Google displays the market.

Multiple providers can appear side by side. Even competing definitions of “AI optimisation” rank together.

Visibility is distributed across a page.

Now ask the same question in Gemini.

Instead of ten links, you receive one structured answer.

Gemini begins by defining the space: “Optimising for AI search — often called Generative Engine Optimisation (GEO) or Answer Engine Optimisation (AEO)…”

It then constructs categories:

Specialised AI SEO Agencies

Enterprise & B2B Consultants

Self-Service Tools

Within each category, it names only a small number of providers.

Dozens of ranking companies are reduced to a shortlist.

That’s the shift.

Traditional search ranks pages. AI search selects entities.

In Google, the market is displayed. In AI answers, the market is compressed, and compression creates winners and invisible players.

Inside those compressed answers, inclusion follows three conditions: entities are cited when they can be clearly classified, reinforced across independent sources, and confidently justified within the explanation.

That filtering effect becomes clearer when we examine how AI systems decide what to cite.

Established vs Emerging Markets in AI Answers

To understand why this matters, compare it with a mature category like CRM software.

Ask Gemini: “Best CRM software for small business.”

The response is confident and predictable.

It lists:

HubSpot

Zoho

Pipedrive

Monday CRM

Salesforce

No category invention. No terminology confusion. No blending of agencies, consultants, and tools.

CRM is stable.

The leaders are widely agreed upon. The language is consistent across sources.

AI reflects the market.

Now return to AI optimisation.

Gemini has to define the terminology. It has to create its own structure. It blends agencies, PR firms, consultants, and SaaS platforms into one answer.

AI isn’t simply reflecting consensus.

It’s constructing a usable version of a fragmented ecosystem.

That tells us something important.

AI optimisation is not yet a universally settled category. Its terminology and boundaries are still stabilising.

And when AI systems classify an emerging market, they also decide:

Which labels survive

Which providers fit cleanly into a category

Which names are strong enough to include

Which disappear

Ranking determines retrieval.

Classification determines inclusion.

In emerging markets, inclusion becomes far more selective, which is exactly why some tools, agencies, and platforms get cited repeatedly while others remain invisible.

How AI Decides What to Cite in a Classified Market

Once AI systems compress a fragmented market into a structured answer, the next decision is selective inclusion.

The question is not whether AI filters, but how it decides which entities remain.

Inside the AI optimisation space, three patterns consistently shape which names appear.

1. Repetition Across Independent Sources

Providers that appear repeatedly across independent sources are more likely to be cited.

Repetition here means more than backlinks.

It means:

Comparison articles describing them similarly

Industry roundups referencing them consistently

Review platforms categorising them the same way

Educational content explaining them in comparable terms

When multiple sources describe a provider as, for example, an “AI search optimisation platform” or an “AEO agency,” that language pattern becomes stable.

AI systems recognise that stability.

Newer entrants often struggle here.

They may publish strong content on their own website, but without reinforcement across independent sources, there is no broader pattern to validate inclusion.

Inside AI answers, repetition becomes credibility.

2. Clean Category Fit

In emerging markets, classification determines visibility.

AI systems must decide:

Is this a tool? An agency? A consultancy? A platform layered onto SEO?

Providers that fit cleanly into a recognisable category are easier to include.

Inside the Gemini example, the market was divided into:

Specialised AI SEO Agencies

Enterprise & B2B Consultants

Self-Service Tools

Only providers that clearly aligned with one of those categories were named.

Providers with blurred positioning (mixing tool language, consultancy language, and generic SEO terminology) are harder to classify.

What cannot be classified cleanly is less likely to be cited.

In compressed answers, ambiguity is costly.

3. Justifiable Consensus

AI systems favour names they can justify.

You can see this in the phrasing of AI-generated answers:

“Commonly used platforms include…”

“Industry-recognised agencies such as…”

“Well-known tools in this space…”

This language signals something important.

Inclusion requires defensibility.

Inside the AI optimisation market, that often results in:

Established SEO platforms with AI features appearing in tool-based answers

Agencies appearing primarily on hire-intent queries

Software with strong third-party coverage being cited more frequently than niche startups

This doesn’t necessarily mean those providers are better.

It means they are easier to justify.

AI systems reduce risk by citing names that appear to reflect broader agreement.

The Pattern in Plain Terms

Across AI answers, the same filtering logic operates:

Repetition → Clear classification → Justifiable inclusion.

In mature markets, that pattern reinforces already dominant leaders.

In emerging markets like AI optimisation, it shapes who becomes visible in the first place.

Small differences in positioning can determine whether a provider is included or omitted entirely.
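To make the three-step filter concrete, here is a deliberately simplified toy model in Python. It is not how Gemini or any other AI system actually selects citations. The provider names, the thresholds (`min_mentions`, `min_label_agreement`), and the number of answer slots (`max_slots`) are all hypothetical assumptions chosen only to illustrate the logic of repetition, classification, and justifiable inclusion.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    name: str
    # How independent sources label this provider, e.g. "tool" or "agency"
    source_labels: list[str]
    # Number of independent (third-party) sources that reference it
    independent_mentions: int


def shortlist(
    providers: list[Provider],
    category: str,
    min_mentions: int = 3,  # hypothetical repetition threshold
    min_label_agreement: float = 0.75,  # hypothetical classification threshold
    max_slots: int = 3,  # hypothetical space in a compressed answer
) -> list[str]:
    """Toy filter: repetition -> clear classification -> justifiable inclusion."""
    candidates = []
    for p in providers:
        # 1. Repetition: skip providers with too few independent mentions.
        if p.independent_mentions < min_mentions:
            continue
        # 2. Clear classification: most source labels must agree on the category.
        if not p.source_labels:
            continue
        agreement = p.source_labels.count(category) / len(p.source_labels)
        if agreement < min_label_agreement:
            continue
        candidates.append((p.independent_mentions, agreement, p.name))
    # 3. Justifiable inclusion: rank by reinforcement, keep only the top slots.
    candidates.sort(reverse=True)
    return [name for _, _, name in candidates[:max_slots]]


# Hypothetical providers, for illustration only.
providers = [
    Provider("EstablishedSEOSuite", ["tool"] * 8, 12),
    Provider("SpecialistTracker", ["tool"] * 4 + ["agency"], 5),
    Provider("NewEntrant", ["tool"], 1),
    Provider("BlurredPositioning", ["tool", "agency", "consultancy", "platform"], 9),
]

result = shortlist(providers, "tool")
print(result)  # ['EstablishedSEOSuite', 'SpecialistTracker']
```

In this toy run, the newer entrant is dropped at the repetition step, and the provider with blurred positioning is dropped at the classification step, even though it has more independent mentions than the specialist tracker that does get included.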

The clearest place to see this inclusion logic is in how AI answers structure the market itself.

How AI Separates Tools, Agencies, and Platforms

One of the most revealing patterns in AI answers about AI optimisation is how the market gets divided.

When asked who can help optimise for AI search, systems like Gemini do not return a single undifferentiated list.

They classify.

In the example we examined earlier, the market was divided into:

Specialised AI SEO Agencies

Enterprise & B2B Consultants

Self-Service Tools

That classification process matters.

Because once AI creates categories, inclusion happens inside those categories, not across the entire market.

What AI Treats as an “AI Optimisation Tool”

In AI answers, tools are typically described as:

Software products

Platforms with defined feature sets

Self-service systems

Add-ons to established SEO platforms

These often include:

SEO platforms that have introduced AI visibility features

AI grading tools

Citation monitoring tools

Structured content optimisation software

What separates tools from agencies in AI answers is clarity of function.

A tool performs a defined task. It can be described in terms of features.

It has a product identity independent of a founder or consulting team.

That clarity makes tools easier to cite in structured lists.

In compressed AI answers, tools often dominate “what should I use?” queries because they fit neatly into a product category.

What AI Treats as an Agency or Consultancy

Agencies appear differently.

They are more likely to be included when the query signals hiring intent:

“Who can help me…”

“Best agencies for…”

“Which consultants specialise in…”

In those contexts, AI systems often:

Create a shortlist

Group agencies by focus or region

Emphasise experience or specialisation

However, agencies appear less frequently in general “how to optimise” queries.

Why?

Because those queries lean informational. Tools answer informational needs more cleanly. Agencies answer transactional intent.

That distinction shapes visibility patterns.

Ranking well for AI SEO terms does not guarantee that an agency will be named in an informational AI answer.

Intent shapes inclusion.

Where Platforms Sit (And Why They’re Cited Differently)

Platforms, especially established SEO platforms, occupy a distinct position.

They are:

Recognised entities

Widely referenced across independent sources

Frequently covered in research and comparisons

Embedded in existing SEO workflows

When those platforms introduce AI-related features, they inherit existing authority.

AI systems already recognise them.

So when a query relates to AI optimisation tools, those platforms are often included even if AI visibility is only one part of their broader offering.

This is an important pattern inside emerging markets: established entities with adjacent relevance often dominate early citations.

Not necessarily because they are the most specialised.

But because they are the most defensible.

Why Category Boundaries Matter

In a mature market like CRM software, the boundaries between product and consultancy are clear.

In AI optimisation, they are still fluid.

Some providers:

Offer software and services

Call themselves platforms but operate like agencies

Use multiple labels across different pages

When positioning fragments, classification becomes harder.

When classification becomes harder, inclusion becomes less likely.

AI systems favour providers that:

Fit cleanly into a category

Use consistent language across sources

Reinforce the same identity repeatedly

In a compressed answer, ambiguity is filtered out.

What This Means for Citation Patterns

When AI separates tools, agencies, and platforms, it narrows the field within each category. You are no longer competing with the entire market.

You are competing inside the category AI assigns you.

And if your positioning is inconsistent, you risk not being assigned clearly at all.

In emerging markets, categorisation is visibility.

In AI-generated answers, categorisation determines who gets cited and who disappears.

Which Types of Tools Get Cited Most Often (and Why)

Once AI systems classify the AI optimisation market into tools, agencies, and platforms, another filtering pattern becomes visible.

Not all tools are cited equally.

Some appear repeatedly across AI answers.

Others rarely appear at all, even when they rank in traditional search.

The difference isn’t capability alone.

It’s whether the provider has the structural signals that support citation.

Established SEO Platforms with AI Visibility Features

One consistent pattern across AI answers is the inclusion of established SEO platforms that have added AI-related capabilities.

These platforms already have:

Strong brand recognition

Broad third-party coverage

Consistent category positioning

Long-standing authority within search

When they introduce AI visibility features, they do not start from zero.

AI systems already recognise them as legitimate entities within the broader search ecosystem.

So when a query relates to AI optimisation tools, these platforms are often included even if AI optimisation is only one part of their offering.

This is not necessarily a judgement of specialisation.

It is a reflection of structural safety.

Established platforms are easier to justify.

Tools That Appear Across Multiple Sources

Another group that appears more frequently in AI answers includes tools that:

Are reviewed in comparison articles

Are mentioned in industry blogs

Are discussed in forums or roundups

Are described consistently across sources

This external reinforcement matters.

AI systems look for agreement across independent sources.

If multiple publications describe a tool in similar terms (for example, as an “AI visibility tracker” or an “AEO grading tool”), that pattern strengthens inclusion.

Single-source visibility is rarely enough.

Cross-source corroboration increases citation likelihood.

Why Newer or Niche Tools Struggle to Be Cited

Emerging AI-native tools often face a structural challenge.

Even if technically strong, they may:

Have limited third-party coverage

Use inconsistent terminology

Blur category boundaries

Lack reinforcement across independent sources

Inside compressed AI answers, there is limited space.

Inclusion becomes competitive.

When AI must choose between:

A widely recognised platform

A well-reviewed specialist tool

A newer, less-referenced entrant

It will often select the names that are easiest to justify.

This does not mean newer tools lack value.

It means citation patterns favour recognisable, reinforced entities.

In emerging markets, this effect is amplified.

Because the category itself is still forming, AI systems default toward entities that appear stable within that formation.

Safe Defaults in Emerging Markets

Across AI answers about AI optimisation, a subtle pattern appears.

When uncertainty exists, AI systems lean toward safe defaults.

Safe defaults are:

Recognisable brands

Consistently categorised tools

Providers with broad ecosystem reinforcement

Platforms adjacent to established SEO workflows

This is risk minimisation.

In a mature market like CRM software, safe defaults are obvious and widely agreed upon.

In AI optimisation, safe defaults are still solidifying, and while they do, inclusion becomes uneven.

Some names begin appearing repeatedly.

Others remain invisible, regardless of ranking performance.

What This Reveals About Citation Behaviour

When we step back, a pattern becomes clear.

AI answers about AI optimisation tools are not purely merit-based.

They are structure-based.

Tools that are more likely to be cited are:

Repeated

Clearly classified

Independently reinforced

Easily justifiable

Tools that lack those signals are less likely to appear, even if they rank in search.

Inside AI-generated answers, defensibility outweighs novelty.

In emerging markets, defensibility determines visibility.

Which Agencies Get Cited - and When

Agencies appear in AI answers differently from tools.

They are not treated as feature sets.

They are treated as providers of expertise.

That changes when and how they are included.

Agencies Appear Primarily on Hire-Intent Queries

When a user asks:

“Who can help me optimise for AI search?”

“Best AI SEO agency?”

“Which consultant specialises in AEO?”

AI systems are more likely to include agencies by name.

These are transactional or decision-stage queries.

The user is looking for a provider, so naming one becomes appropriate.

But when the query is informational:

“How do I optimise for AI?”

“What is AI search optimisation?”

“How does GEO work?”

Agencies appear far less frequently.

Instead, AI systems lean toward:

Tools

Platforms

Institutional sources

Educational explainers

This is not a judgement of capability; it reflects intent alignment.

AI answers prioritise the format that best matches the query type.

Common Traits of Agencies That Appear Repeatedly

Across AI answers about AI optimisation agencies, a pattern becomes visible.

The agencies that appear most often tend to:

Use stable, consistent category language

Be described similarly across independent sources

Appear in comparison articles or industry roundups

Be referenced beyond their own website

They are not necessarily the largest.

But they are the most reinforced.

Agencies with:

Clear specialisation

Consistent terminology

Cross-source presence

are easier for AI systems to justify including.

Why Most Agencies Never Appear

Many agencies:

Rank for AI SEO terms

Publish content about AI optimisation

Offer relevant services

And still never appear in AI-generated answers.

This often happens because:

Their category positioning shifts across pages

They are described inconsistently across platforms

They lack reinforcement from independent sources

They are new to the ecosystem

Inside compressed AI answers, there is limited space.

When inclusion is selective, agencies compete not just on quality but on structural clarity and reinforcement.

Ranking alone does not guarantee inclusion.

In emerging markets, agencies without cross-source validation are often filtered out.

What Rarely Gets Cited - Even When It Ranks Well

One of the most misunderstood patterns in AI visibility is this:

Ranking does not guarantee inclusion.

In fact, some of the most common page types that rank for AI optimisation terms rarely appear inside AI-generated answers.

This isn’t accidental.

It reflects how AI systems evaluate reuse risk.

Promotional or Opinion-Led Pages

Pages written primarily to promote services often rank well.

They may target “AI SEO agency” or “AI optimisation services” and perform strongly in traditional search.

But inside AI answers, they are cited far less frequently.

Why?

Because AI systems favour:

Explanatory content

Neutral framing

Consensus-backed language

Promotional copy is harder to reuse.

It often contains positioning claims that are not independently reinforced.

In compressed AI answers, unverified claims increase risk.

Vague Category Pages

Another common pattern in the AI optimisation space is category ambiguity.

Many pages describe services using overlapping language:

AI SEO

GEO

AEO

AI visibility

AI search marketing

Sometimes all within the same page.

While this may reflect experimentation in a new market, it creates classification friction.

When terminology shifts within a single provider’s ecosystem, AI systems struggle to assign a stable category.

When classification becomes unclear, inclusion becomes less likely.

Clarity does not just support ranking. It supports citation.

Single-Source Authority

Some providers publish excellent content.

They define terms clearly. They explain AI search well. They offer structured insight.

But if that clarity exists only on their own domain, without reinforcement elsewhere, citation frequency remains limited.

AI systems look for corroboration.

When only one source describes a provider in a particular way, that description remains isolated.

When multiple independent sources repeat it, it becomes a pattern.

And AI cites patterns.

Inconsistent Entity Positioning

This is particularly relevant in emerging markets.

If a provider is described across platforms as:

A digital marketing agency

An AI consultancy

A growth partner

A technology advisor

…without reinforcing a single stable category, AI systems receive fragmented signals.

Fragmentation reduces classification confidence, and lower classification confidence reduces citation likelihood.

Inside compressed AI answers, ambiguity is filtered out.

The Pattern Behind Omission

When we step back, a clear theme emerges.

AI systems do not cite based solely on:

Keyword optimisation

Content volume

Page ranking

Claims of expertise

They cite based on:

Structural clarity

Cross-source reinforcement

Category stability

Justifiable consensus

In emerging markets like AI optimisation, these signals are still consolidating.

Which means omission is common.

Many providers rank. Far fewer are cited.

And that gap is not random.

It is structural.

How AI Optimisation Is Being Defined in Real Time

The AI optimisation market is not just being marketed.

It is being classified.

AI systems are actively shaping how this category is understood by:

Defining terminology

Constructing subcategories

Selecting which providers represent each group

Repeating certain names across answers

In mature markets, AI reflects consensus.

In emerging markets, AI helps form it.

That formation process determines:

Which labels stabilise

Which providers become representative

Which approaches gain reinforcement

Which disappear from the answer layer entirely

Visibility in AI search is no longer only about ranking pages.

It is about becoming structurally legible within a category that is still being defined.

In that environment, citation is not accidental.

It is the outcome of repetition, clarity, and structural agreement across the ecosystem.

Understanding how AI systems classify and cite this emerging market makes it easier to see where visibility is being formed and why some names become embedded in answers while others remain outside them.
